1. Dataset Generator: Dot or No-Dot

1.1 Description

One of the great problems in the realm of Domain Generalization is generalizing the idea of numbers such that I could train a machine learning model on a data set like MNIST then use that model to accurately classify another number dataset like USPS or SHVN. Current models struggle to generalize since they are unable to capture the abstract idea of number. When a model is trained on a number set, its description of a number is rooted in size, color, shape, and location. So when the model is tested on another dataset where the representation of a number may vary in the aforementioned qualities, the model fails. So the question is, can we develop a model to generalize.

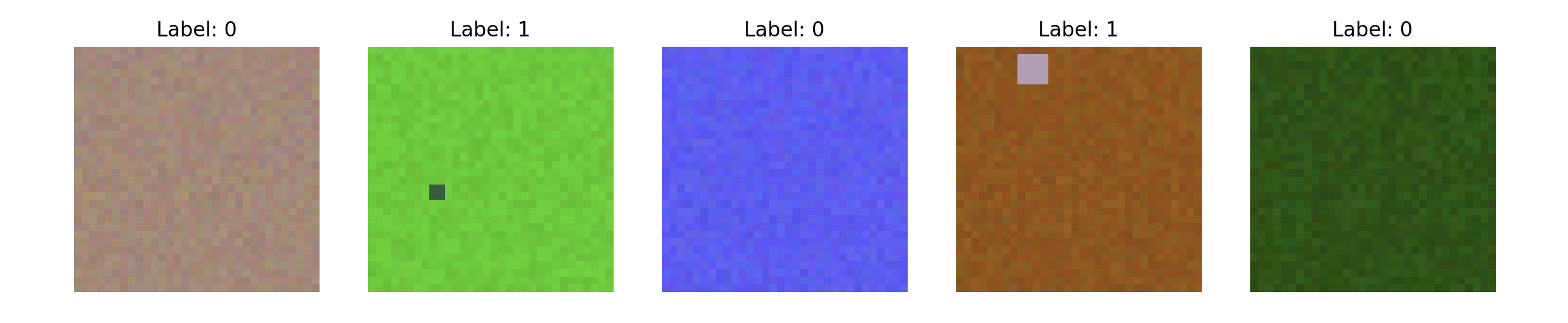

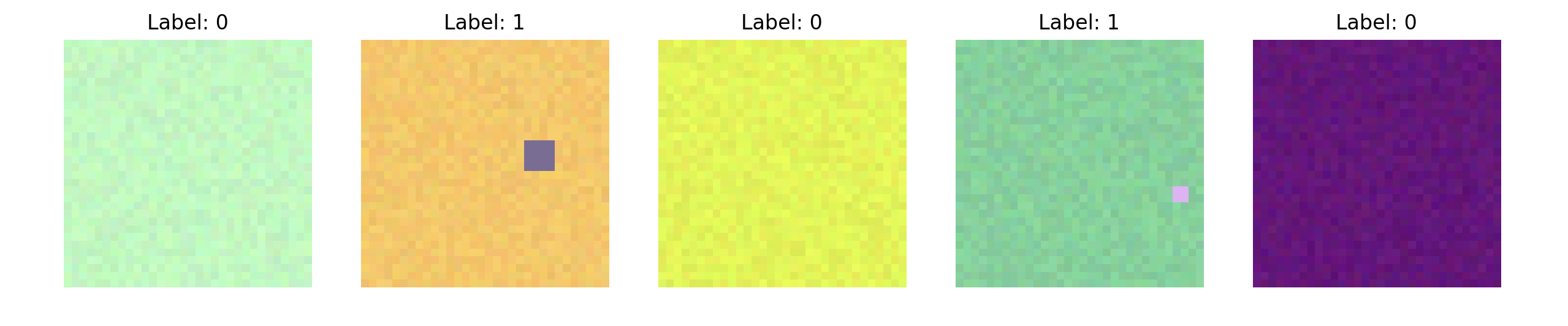

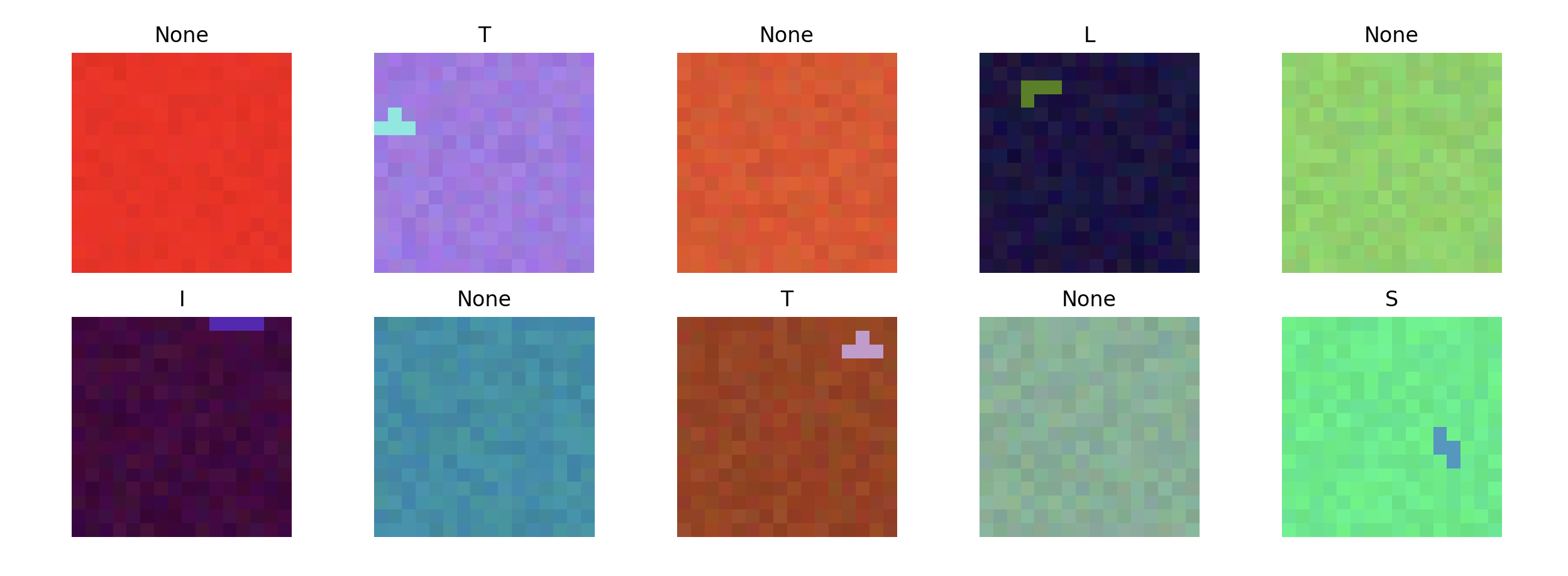

I realized early on that to find such a model it is first worth simplifying the data set so that the object being abstracted is simple. So I came up with the idea of the Dot or No-Dot data set. The code I have above generates images of specified dimension and quantity, half of which have a dot and the other half does not. The images that have no dot are a randomly selected gradient. In images with a dot, I randomly vary the background color, the background gradient, the dot color, the dot location, and the dot size. As a result, in each image the idea of a dot or no dot is represented differently, hence a model that is able to classify the images correctly has abstracted the idea of dot invariant of color, size and location. The motivation is that such a model may also be able to abstract the idea of a number.

1.2 Code

import numpy as np

import matplotlib.pyplot as plt

from random import randint, choice

def generate_mixed_dataset(num_images):

images = [] # Store generated images

labels = [] # Store labels for the images

for _ in range(num_images // 2):

# Create a homogeneous background image

base_color = np.random.randint(0, 256, 3) # Pick a random color

homogeneous_image = np.zeros((32, 32, 3), dtype=np.uint8)

for x in range(32):

for y in range(32):

# Add some random variation to the color

variation = np.random.randint(-10, 11, 3)

pixel_color = np.clip(base_color + variation, 0, 255)

homogeneous_image[x, y] = pixel_color

images.append(homogeneous_image)

labels.append(0) # 0 for no dot

# Create an image with a dot on a gradient background

base_color = np.random.randint(0, 256, 3) # Again, pick a random color

gradient_image = np.zeros((32, 32, 3), dtype=np.uint8)

for x in range(32):

for y in range(32):

# Make a gradient variation

variation = np.random.randint(-10, 11, 3)

pixel_color = np.clip(base_color + variation, 0, 255)

gradient_image[x, y] = pixel_color

dot_size = randint(1, 4) # Choose a random size for the dot

x = randint(0, 32 - dot_size) # Random position for the dot

y = randint(0, 32 - dot_size)

dot_color = np.random.randint(0, 256, 3) # Random color for the dot

# Make sure the dot color stands out

while np.any(np.abs(dot_color - gradient_image[x, y]) < 20):

dot_color = np.random.randint(0, 256, 3)

for i in range(dot_size):

for j in range(dot_size):

gradient_image[x + i, y + j] = dot_color # Place the dot

images.append(gradient_image)

labels.append(1) # 1 for dot present

return images, labels

Brief overview of the coding aspects of the project or links to code repositories...

1.3 Images